Speech AI is technology that enables humans and computer systems to communicate by voice. Think of voice assistants like Siri and Alexa: speech AI makes them possible by bridging the gap between what we say and how computers process that language.

Beyond popular voice assistants, speech AI is an essential tool in many industries, helping us work more efficiently, accurately, and easily. It can be used in fields such as healthcare, aviation, the automotive industry, and many more. Because it requires nothing more than our voice, speech AI makes technology accessible to more people and is transforming the way we work.

This blog post examines speech AI and its capabilities, the technology that makes it work, its applications and challenges, and more. We’ll also look at how aiOla uses AI and speech technology to help industries boost productivity and simplify mission-critical tasks.

How Does Speech AI Work?

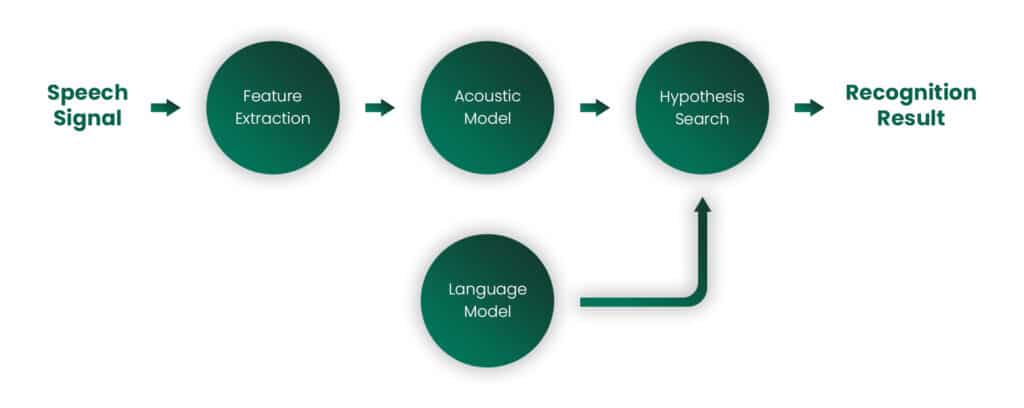

Automatic speech recognition (ASR) technology enables a computer program to convert spoken words into text. Voice recognition, by contrast, focuses on identifying a specific individual from their voice rather than transcribing what they say.

ASR is a foundational component of speech AI, which also relies on natural language processing (NLP) and natural language understanding (NLU) to turn the transcribed text into action. While ASR simply converts speech to text, NLP parses the meaning behind the words by assessing context, syntax, and semantics, allowing the system to extract important information from the text and interpret its intent.
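The handoff from ASR to NLU can be illustrated with a small example. The minimal Python sketch below assumes ASR has already turned the audio into a transcript, and it uses hand-written regular expressions as a stand-in for a trained NLU model. The intent names, patterns, and the `understand` function are hypothetical and do not describe how any particular assistant works.

```python
import re
from dataclasses import dataclass, field


@dataclass
class Intent:
    name: str                                  # what the user wants to do
    slots: dict = field(default_factory=dict)  # parameters pulled from the text


# Hypothetical patterns for two intents; each named group becomes a slot.
TIME = r"\d{1,2}(?::\d{2})?\s?(?:am|pm)?"
PATTERNS = [
    ("set_alarm", re.compile(rf"set an alarm for (?P<time>{TIME})")),
    ("set_reminder", re.compile(rf"remind me to (?P<task>.+?)(?: at (?P<time>{TIME}))?$")),
]


def understand(transcript: str) -> Intent:
    """Map an ASR transcript to an intent plus slots (rule-based stand-in for NLU)."""
    text = transcript.lower().strip().rstrip(".?!")
    for name, pattern in PATTERNS:
        match = pattern.search(text)
        if match:
            # Keep only the slots that actually appeared in the utterance
            slots = {k: v for k, v in match.groupdict().items() if v is not None}
            return Intent(name, slots)
    return Intent("unknown")
```

For example, `understand("Remind me to call the dentist at 3 pm")` returns the `set_reminder` intent with `task` set to "call the dentist" and `time` set to "3 pm", which an application could then turn into an action. Production systems replace the regex table with ML models trained on large volumes of labeled utterances.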

Advanced NLP models based on machine learning (ML) allow speech AI systems to handle complex language tasks, such as interpreting context and sentiment or even generating human-like responses. ML algorithms learn from extensive training data, including diverse audio samples covering different languages, accents, and speech styles, which lets them adapt to the intricacies of human speech patterns. As a result, the higher the quality and diversity of the training data, the more accurate and robust speech AI technology can become.

When used together, ASR, NLU, NLP, and ML can power a wide variety of applications with countless use cases, revolutionizing the way we work and interact with technology through natural language.

Applications of Speech AI

Speech AI can be applied across many industries and domains: some applications improve the way we work, while others improve the way we live and interact with the world and technology around us. These use cases will keep growing as the market for voice and speech recognition solutions expands. In 2022, the market was valued at USD 17.7 billion and is expected to grow at a compound annual growth rate (CAGR) of 14.9% through 2030. Here are some ways speech AI affects different aspects of daily life.
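To see what a CAGR figure implies, the compound-growth formula is value = base × (1 + rate)^years. The minimal Python sketch below applies it and its inverse. The function names are our own, and treating 2022 to 2030 as eight full compounding years is an assumption for illustration, not a figure taken from the underlying forecast.

```python
def project_value(base: float, annual_rate: float, years: int) -> float:
    """Value after `years` of compounding at a constant annual rate."""
    return base * (1 + annual_rate) ** years


def cagr(start: float, end: float, years: int) -> float:
    """Constant annual rate that turns `start` into `end` over `years` years."""
    return (end / start) ** (1 / years) - 1


# Assumption for illustration only: 14.9% applied to the 2022 value
# (USD 17.7 billion) for eight full years, 2022 -> 2030.
growth_multiple = project_value(1.0, 0.149, 8)
projected_2030 = project_value(17.7, 0.149, 8)
print(f"Growth multiple over 8 years: {growth_multiple:.2f}x")
```

Under that assumption, a 14.9% CAGR sustained for eight years works out to a little over three times the starting value. The forecast's own end-of-period figure may differ depending on its base year and methodology.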

Virtual Assistants

It’s projected that by 2026, there will be over 150 million voice assistant users in the US alone. This shouldn’t come as a surprise, since speech AI powers tools many of us use every day, like Amazon’s Alexa, Apple’s Siri, and Google Assistant. With these voice assistants, users can set reminders or alarms, ask questions, or look up information. They are also built into smartphones and smart speakers, so they are available at any time, changing the way we complete tasks and search the internet.

Speech-to-Text and Text-to-Speech Technology

Speech-to-text technology converts speech into written text for various purposes, such as transcription, taking notes, or hands-free operation. Text-to-speech, on the other hand, converts text into spoken words, fueling applications for audiobooks, voice-guided navigation systems, and accessibility for the visually impaired.

Business Speech Analytics

Speech AI can analyze voice data and extract insights from it. This is particularly useful in customer service, where businesses can analyze phone interactions to better gauge customer sentiment and improve service quality. Speech AI can also power interactive voice response (IVR) systems, helping customer service teams automate incoming requests and route customer inquiries to the right place. According to research by McKinsey, combining speech data with other customer data can reveal the full context of a call and highlight opportunities to improve the customer experience.
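As a rough illustration of how an IVR system might direct an inquiry once the caller’s speech has been transcribed, here is a keyword-scoring router in Python. The department names, keyword lists, and the `route_call` function are hypothetical. Real systems typically use trained intent classifiers rather than fixed keyword sets.

```python
import re
from collections import Counter

# Hypothetical routing table: department -> words that suggest it
ROUTES = {
    "billing": {"bill", "charge", "refund", "invoice", "payment"},
    "technical_support": {"error", "broken", "crash", "login", "password"},
    "sales": {"upgrade", "pricing", "plan", "buy"},
}


def route_call(transcript: str, default: str = "general") -> str:
    """Send the call to the department whose keywords appear most often."""
    words = re.findall(r"[a-z']+", transcript.lower())
    scores = Counter()
    for dept, keywords in ROUTES.items():
        scores[dept] = sum(1 for w in words if w in keywords)
    dept, best = scores.most_common(1)[0]
    # If no keyword matched at all, fall back to a general queue
    return dept if best > 0 else default


print(route_call("I was charged twice and want a refund"))  # billing
```

Because the router matches whole words only, "charged" does not count toward "charge"; the call still reaches billing through "refund". Stemming or a learned model would handle such variations more gracefully.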

Accessibility for Differently Abled Individuals

The ability to turn text into speech and vice versa makes technology accessible to more people, particularly those with mobility, visual, or hearing impairments. Speech recognition helps people interact with devices, access information, and perform tasks using only voice commands, making digital experiences more inclusive.

Challenges and Limitations of AI Speech Recognition Technology

While speech AI technology has made significant advances in recent years, it’s not infallible. Some limitations concern its usability in certain professional contexts; others stem from the datasets it’s trained on. Even models trained on large, sophisticated datasets may not cover every accent, vocabulary, or acoustic environment needed to recognize speech reliably in certain settings. Let’s take a closer look at some areas where speech AI can fall short.

Accuracy and Understanding of Linguistic Nuances

When it comes to speech, accuracy can be challenging. According to the Benchmark report on the accuracy of Speech AI models, Google’s accuracy was around 83% and AWS’s almost 90%. While these figures may seem high, in scenarios where precision makes all the difference, such as healthcare, the remaining errors can make a system unreliable. With so many accents, languages, and industry-specific terms, it can be difficult for a system to pick up on subtle contextual nuances in speech. Sarcasm, slang, and subtle variations in wording or tone can also affect what a speech AI platform picks up, leading to ambiguous results.
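Speech recognition accuracy is usually measured with word error rate (WER): the number of word substitutions, deletions, and insertions needed to turn the system’s transcript into a reference transcript, divided by the number of words in the reference. The Python sketch below computes WER with a standard edit-distance table. Reading the benchmark’s “accuracy” as 1 - WER is a common convention, but it is our assumption here, not something the report is quoted as stating. The example sentences are invented.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")
    # dp[i][j] = fewest edits turning the first i reference words
    # into the first j hypothesis words
    dp = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dp[i][0] = i  # delete all i reference words
    for j in range(len(hyp) + 1):
        dp[0][j] = j  # insert all j hypothesis words
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            dp[i][j] = min(
                dp[i - 1][j] + 1,         # deletion
                dp[i][j - 1] + 1,         # insertion
                dp[i - 1][j - 1] + cost,  # substitution (or match if cost is 0)
            )
    return dp[len(ref)][len(hyp)] / len(ref)


reference = "increase the dose to five milligrams"
hypothesis = "increase the dose to nine milligrams"
wer = word_error_rate(reference, hypothesis)
print(f"WER = {wer:.2f}, accuracy = {1 - wer:.0%}")  # WER = 0.17, accuracy = 83%
```

In this example, mishearing a single word out of six gives roughly 83% accuracy under the 1 - WER reading, and that one word is the dosage. This is why a headline accuracy figure can still be inadequate in a clinical setting.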

Privacy Concerns in Voice Data Collection

Collecting and analyzing voice data raises compliance and privacy concerns, especially in business contexts. According to a report by Microsoft, 41% of users are concerned about privacy, trust, and passive listening. As these technologies become more prevalent, the risk of unauthorized access increases. Preventing the misuse of voice data for malicious purposes, such as surveillance or gaining access to confidential information, is another difficult challenge.

Multilingual and Dialectal Challenges

Speech AI systems can struggle to accurately interpret languages with many accents or regional variations, which makes it hard to ensure the technology works reliably for every user. Unique linguistic features, such as regional dialects or speakers switching between languages within the same conversation, add further difficulty.

Interference from Environmental and Ambient Noise

Ambient noise and other environmental factors can affect the performance of speech AI platforms. For example, background noise in crowded areas, interference from other audio sources, or variations in audio quality can all lead to inaccuracies. In business settings such as manufacturing or fleet management, background noise is nearly constant and can interfere with speech recognition and understanding.
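Engineers commonly quantify how noisy a recording is with the signal-to-noise ratio (SNR) in decibels: 10 × log10(speech power / noise power). A widely used mitigation is to train models on clean speech mixed with noise at controlled SNRs so they learn to cope with noisy conditions. The Python sketch below shows both steps on plain lists of samples. The function names are our own, the tone and random values are synthetic stand-ins for real speech and factory noise, and real pipelines would operate on audio arrays loaded from files.

```python
import math
import random


def power(samples):
    """Mean squared amplitude of a sequence of audio samples."""
    return sum(s * s for s in samples) / len(samples)


def snr_db(speech, noise):
    """Signal-to-noise ratio in decibels: 10 * log10(P_speech / P_noise)."""
    return 10 * math.log10(power(speech) / power(noise))


def mix_at_snr(speech, noise, target_db):
    """Add noise to speech, scaled so that the result has the requested SNR.

    Assumes both inputs have the same length and the noise is not silent.
    """
    # Noise power needed for the target SNR: P_speech / 10^(target_db / 10)
    wanted_noise_power = power(speech) / 10 ** (target_db / 10)
    scale = math.sqrt(wanted_noise_power / power(noise))
    return [s + scale * n for s, n in zip(speech, noise)]


# Toy data: a 440 Hz tone sampled at 16 kHz stands in for speech,
# and uniform random values stand in for background noise.
random.seed(0)
speech = [math.sin(2 * math.pi * 440 * t / 16000) for t in range(16000)]
noise = [random.uniform(-1, 1) for _ in range(16000)]
noisy = mix_at_snr(speech, noise, target_db=5)

# Recover the added noise and check the SNR we asked for
added_noise = [x - s for x, s in zip(noisy, speech)]
print(round(snr_db(speech, added_noise), 2))  # 5.0
```

Lower SNR values mean noisier audio. Sweeping `target_db` across a range during training is one way to expose a model to the kind of conditions found on a factory floor or in a vehicle.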

Looking Ahead: Future Trends in Speech AI

According to Deepgram’s State of Voice Technology 2023 report, 79% of companies surveyed reported revenue increases of up to 50%, and as many as 99% reported productivity gains after adopting speech technology. These results show the impact speech AI is already having across industries.

As technology advances and more research is done into the capabilities of AI speech recognition tools, speech AI will inevitably evolve even further. We’ll likely see the integration of speech AI tools in various applications, allowing for further efficiencies in both personal and professional settings. Looking ahead, here are a few trends we’re expecting to see develop further in speech AI technology.

Advancements in Voice Synthesis

Further developments in voice synthesis will lead to more natural, human-like voices. Improvements in intonation and expressiveness will make synthetic voices nearly indistinguishable from real ones, improving the user experience of audiobooks, voiceovers, and virtual assistants.

Improved Contextual Understanding

As ASR, NLU, and ML technologies advance, the contextual understanding of speech AI systems will continue to improve. These systems will become better at grasping nuances in conversation and understanding a speaker’s intent, inferring meaning more accurately from voice cues. This will allow speech AI to support more natural and engaging interactions between people and machines.

Integration with Emerging Technologies

We’ll likely see speech AI platforms integrate with other types of AI across different fields to deliver more sophisticated and versatile systems. For example, pairing AI-powered robots with advanced speech recognition would let them communicate with humans, opening up a wider range of use cases in industries like healthcare, customer service, manufacturing, and more.

Ethical Considerations and Regulations

While the above trends aren’t the only ways speech AI is expected to evolve, they do raise questions about ethics and regulation. Future developments in speech AI are likely to be shaped heavily by ethical and regulatory considerations to ensure the technology is used responsibly and fairly. These can include:

- Addressing biases in training data and trustworthiness in AI

- Ensuring transparency in how speech AI systems operate and store data

- Implementing mechanisms for user consent and control over their voice data

- Introducing regulations to govern data privacy, security, and ethical use of speech AI in sensitive fields like finance and healthcare

aiOla: The Future of Speech AI in Critical Industries

aiOla is an AI-driven speech platform that is pioneering how businesses can harness the power of speech to complete mission-critical tasks. With aiOla, businesses can become more efficient and productive by cutting down on time spent on manual tasks such as inspections and maintenance. aiOla’s platform can also capture, with a high level of accuracy, essential spoken data that would otherwise be lost, enabling businesses to make more informed decisions.

aiOla’s speech platform can:

- Understand over 100 languages including dialects, accents, and industry-specific jargon

- Cut down on manual operations by up to 90%, freeing employees to focus on more creative tasks

- Reduce human error by making inspections more efficient and standardized

- Monitor essential components of a business, such as fleet vehicles or food manufacturing machinery, allowing for more frequent and accurate assessments

- Improve production time by up to 30% by replacing time spent on manual, repetitive tasks

aiOla’s speech platform can be used in a variety of fields, such as fleet management, aviation, manufacturing, food safety, and more. In these industries, accuracy and efficiency are of paramount importance. Not only does aiOla allow teams to reduce time spent on inspections and manual tasks, but it also reduces the risk of error, ensuring longer uptime and a greater rate of productivity.

Speech AI: An Essential Tool

Speech AI is essential to meeting current work demands in many industries. As businesses across the board emphasize productivity, efficiency, and accuracy to meet stringent consumer demands and high quality standards, speech AI becomes a critical component of success. Reducing downtime and eliminating manual tasks, along with the resources they consume, frees up time and budget for more profitable and creative initiatives.

In this sense, the impact of speech AI on industries like manufacturing, aviation, food safety, and others is not only significant but potentially transformative. Systems like aiOla enable companies in these fields and others to make giant strides toward advancements in production, quality control, and customer satisfaction.

Book a demo with one of our experts to see how aiOla’s speech AI can transform your business.