Artificial intelligence technology is being quickly adopted into various workplaces, helping us optimize work processes, cut down on costly resources, and streamline operations. While AI technology has the potential to drastically improve our jobs and even revolutionize specific industries, it doesn’t come without concerns. As AI systems become more prevalent and influential, a fundamental question arises: Can we trust them?

As intelligent systems, from AI assistants to self-driving cars, become increasingly complex and autonomous, concerns about transparency, fairness, privacy, and accountability grow. The need for a comprehensive AI trustworthiness framework has never been more urgent.

In this blog post, we’ll examine trustworthiness risks in AI technology and take a look at some potential solutions, including speech-driven AI systems like aiOla that are able to overcome most risks.

How Are Companies Establishing AI Trustworthiness?

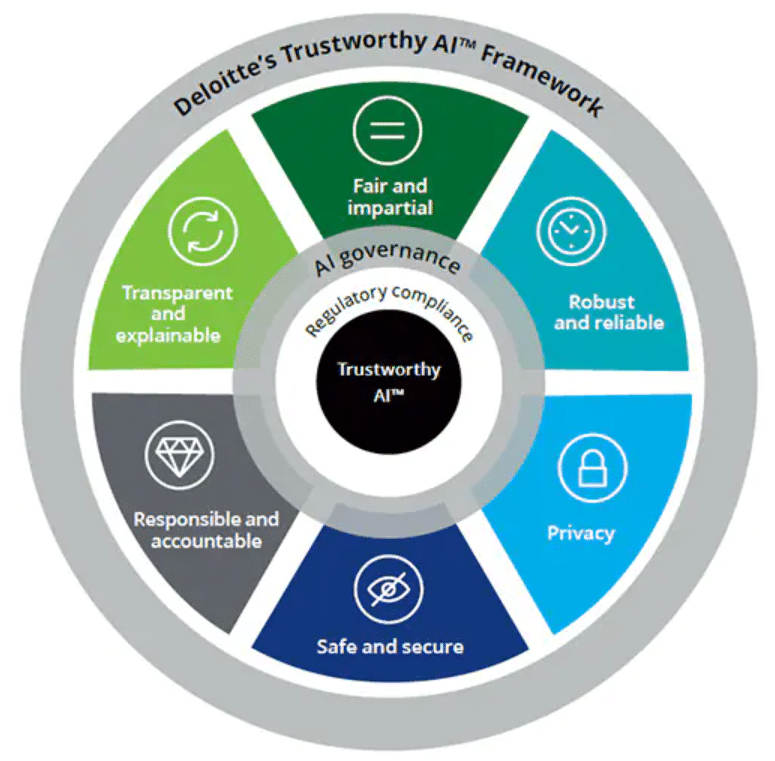

To contend with the ethical challenges related to AI, some companies have started to establish trustworthy AI frameworks that outline how they can mitigate potential AI risks. These frameworks often address the different concerns that arise at each stage of the AI lifecycle. Some frameworks are based on the OECD AI Principles, while others are more tailored. Here’s an overview of what Deloitte’s Trustworthy AI framework looks like:

Deloitte’s framework divides trustworthiness into six categories and helps the company design, develop, and deploy AI systems that comply with each of those categories. Companies with an established trustworthy AI framework still need to decide whether the benefits of a specific AI tool outweigh its potential risks.

Trustworthiness Risks in AI

Trustworthiness, or a lack thereof, in AI comes from different factors. The inputs used to train AI models can be unreliable, there may be privacy or security issues, and the impact on our existing workforce makes it a contentious tool to begin with. To better understand current AI concerns, let’s take a look at some risks specifically surrounding AI’s trustworthiness.

Toxic Content

Toxicity in natural language is defined as comments or text that are rude, unreasonable, or disrespectful and would likely make someone leave a conversation. This becomes an issue in NLP applications that use AI to process and generate text, such as chatbots, translation systems, or even sentiment analysis tools. Language models are trained on vast amounts of data and don’t differentiate between toxic and non-toxic language, meaning AI can pick up these tendencies and create toxic content. In fact, according to the Artificial Intelligence Index Report 2023, researchers have found that larger models trained on web data were more likely to produce toxic content than their smaller counterparts.

Biases

AI is trained by humans, and in the training process, there is always the chance that our own biases may get in the way of an entirely neutral training experience. When using AI systems for things like hiring, screening candidates, lending, healthcare, or a number of other processes, this can have real-world implications that lead to inequality and reinforce existing social biases. According to the same AI report, certain generative AI models such as text-to-image generators often exhibited gender bias, and even language models that perform better on fairness benchmarks still displayed similar gender biases.

Copyright Infringement

Many generative AI models have been trained on existing works, raising ethical questions about who owns the copyright to the work AI produces. Not only that, in many cases the work used to train AI models wasn’t obtained with consent, leading to much criticism and even potential legal issues. Midjourney, a generative AI image model, has found itself the subject of a class-action lawsuit for copyright infringement. Its system is trained on human-generated images and artworks that were used without consent, credit, or compensation.

Impact on the Labor Market

According to The Future of Jobs 2023 report by the World Economic Forum, a quarter of all jobs are set to change in the next five years due to advancements in AI technology. Certain roles are more likely to be impacted than others. For example, it’s predicted that there will be 26 million fewer administrative and record-keeping jobs by 2027, as these tasks can be automated by AI models. Given that AI technology is advancing at breakneck speed, many workers are wary of its trustworthiness, as some fear it will replace them in their jobs.

Hallucination Effect

One of the risks with generative AI systems or large language models (LLMs) is that when they misinterpret or fail to understand a prompt, they can produce outputs containing false information. These outputs don’t match the data the model was trained on or any identifiable pattern. This is referred to as AI hallucination. Some experts speculate this can happen when an AI model is fed misleading data, such as fake news. Regardless of the cause, the repercussions can be significant. At the launch of Google’s Bard, the AI answered a question incorrectly in a demo, after which the company’s shares tumbled and it lost about $100 billion in market value.

Overcoming Trustworthiness Risks with AI Speech Tech

In order to mitigate the risks associated with AI trustworthiness, it’s crucial to implement robust filtering and validation procedures. Additionally, ensuring responsible deployment of AI systems by including monitoring processes and algorithms based on high-quality data can also help reduce opportunities for these risks to arise.
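As a rough illustration of what a filtering and monitoring step might look like, here is a minimal Python sketch. Everything in it is an assumption made for illustration: the `OutputGate` class, the tiny `BLOCKED_TERMS` list, and the length limit are hypothetical, and a real deployment would more likely rely on a maintained lexicon or a trained toxicity classifier.

```python
import re
from dataclasses import dataclass, field

# Hypothetical blocklist for illustration only; a production system would
# use a maintained lexicon or a trained toxicity classifier instead.
BLOCKED_TERMS = {"idiot", "stupid"}


@dataclass
class OutputGate:
    """Filters model outputs and keeps simple counts for monitoring."""
    max_chars: int = 500
    passed: int = 0
    rejected: int = 0
    reasons: list = field(default_factory=list)

    def check(self, text: str) -> bool:
        """Return True if the text passes every check, else record why not."""
        if not text.strip():
            return self._reject("empty output")
        if len(text) > self.max_chars:
            return self._reject("output too long")
        words = set(re.findall(r"[a-z']+", text.lower()))
        if words & BLOCKED_TERMS:
            return self._reject("blocked term")
        self.passed += 1
        return True

    def _reject(self, reason: str) -> bool:
        """Count the rejection and remember the reason for later review."""
        self.rejected += 1
        self.reasons.append(reason)
        return False

    def rejection_rate(self) -> float:
        """Share of checked outputs that were rejected (0.0 if none checked)."""
        total = self.passed + self.rejected
        return self.rejected / total if total else 0.0
```

Each output is passed through `check` before it reaches a user, and `rejection_rate` gives a simple number to watch over time. A sudden rise would be a signal to review the model or its inputs.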

That said, the risks mentioned above aren’t always applicable to all types of AI technology. Automatic speech recognition (ASR), for example, uses different types of inputs than generative AI, making it less susceptible to certain risks like toxic content or copyright infringement. Since data is gathered through speech recognition, the input is constrained, making it a much safer technology.

At aiOla, our AI-powered speech recognition models rely on input from everyday speech to generate powerful data that helps companies make informed business decisions. Since the output is based on speech rather than entirely on data, patterns, or algorithms from trained models, it is less risky.

Moreover, at aiOla, we’re focused on generating a report or a structured output from data gathered through speech. In this case, risks are reduced on several fronts:

- There’s no possibility for copyright infringement since the output is produced from real speech and not trained using existing content

- There’s a lower chance of built-in biases as output is based on real data gathered through speech, meaning it’s more reflective of reality

- The hallucination effect isn’t as much of a concern with aiOla since the output text from ASR is based on structured operations that are designed to be fixed

- This model cannot generate toxic content on its own

- Speech-powered AI models such as aiOla aren’t meant to replace humans in the workplace, but rather enhance their abilities, leading to far less job insecurity
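
To make the idea of a fixed, structured output more concrete, here is a small Python sketch. It is not aiOla’s implementation: the `ALLOWED` schema, the field names, and the `extract_report` function are all hypothetical, and the transcript is assumed to come from some upstream speech recognition step. The point it illustrates is that a report field is filled only when an allowed value was actually spoken, and is otherwise left empty rather than guessed.

```python
import re

# Hypothetical inspection form: each field accepts only these values.
ALLOWED = {
    "machine_status": {"running", "stopped", "faulty"},
    "storage_condition": {"clean", "dirty"},
}


def extract_report(transcript: str) -> dict:
    """Fill a fixed report schema from a transcript.

    A field is set only when an allowed value appears in the transcript;
    otherwise it stays None, so nothing is invented.
    """
    text = transcript.lower()
    words = re.findall(r"[a-z]+", text)
    report: dict = {name: None for name in ALLOWED}
    for name, values in ALLOWED.items():
        for word in words:
            if word in values:
                report[name] = word  # keep the first allowed value spoken
                break
    # Capture a temperature reading such as "4 degrees" if one was spoken.
    match = re.search(r"(-?\d+(?:\.\d+)?)\s*degrees", text)
    report["temperature"] = float(match.group(1)) if match else None
    return report
```

For a transcript like "Line two machine is running, storage is clean at 4 degrees", every field is filled from the spoken words. For "Everything looks fine", every field stays `None`, which flags the report as incomplete instead of filling the gaps with plausible-sounding values.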

aiOla: Boost Your Business with Speech-powered AI

Aside from sidestepping the aforementioned risks through AI-powered speech technology, aiOla helps companies achieve a lot more with its language-based platform. With aiOla, companies can increase efficiency, reduce downtime, improve safety standards, and more. Here’s a look at how:

- aiOla enables companies in different industries to increase efficiency and reduce time and resources spent on manual tasks, like inspection. For example, in the food safety industry, inspectors can use aiOla to verbally work through inspections while the technology captures critical information such as machinery operations, storage conditions, factory line production, and more

- In addition to inspections, workflows such as inventory control, logistics, quality control, and packaging can be streamlined in order to minimize disruptions and improve accuracy

- Since aiOla is entirely powered by speech, the system can be used hands-free, enabling your workforce to focus on tasks and fostering a safer work environment that doesn’t require them to divide their attention

- With aiOla, companies can extract meaningful data through speech. The technology understands over 100 different languages as well as accents and dialects and can comprehend industry-specific jargon and terminology

Put Trustworthiness Back in Your AI Processes with aiOla

As AI technology continues to spread across industries and businesses, companies will have a responsibility to ensure a model’s trustworthiness. Since different AI models operate on different algorithms and structures, not every system will require the same level of rigorous monitoring. As we’ve seen here, generative AI models are a lot more exposed to risks than platforms based on ASR, like aiOla.

Book a demo today to see how aiOla can work for your company.